Original summary

Ollama has been one of my favorite ways to run local models for a while: it makes it really easy to download models, and it's smart about keeping them resident in memory while they are in use and then unloading them once they stop receiving traffic.

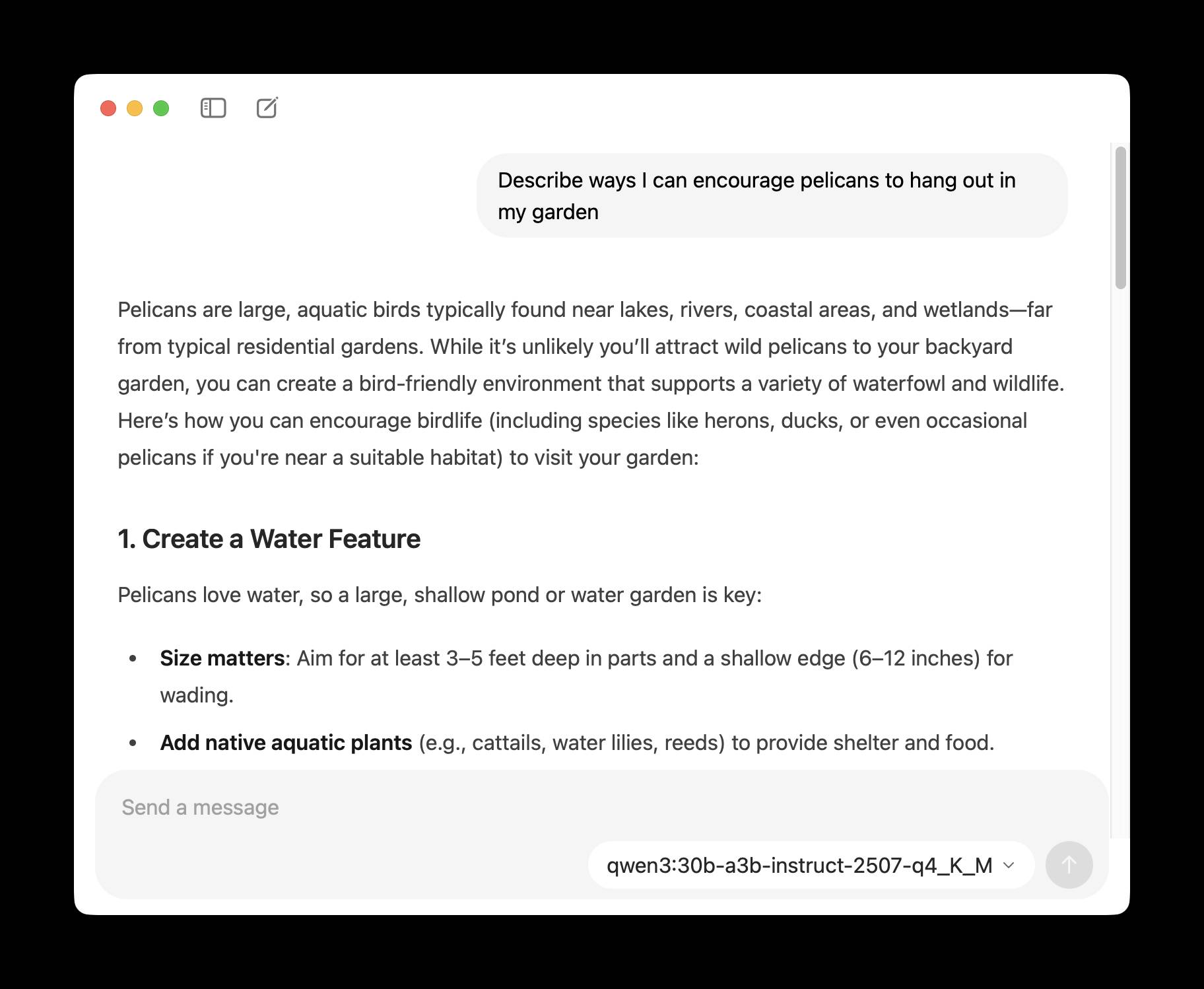

The one missing feature to date has been a graphical interface: Ollama has been exclusively command-line, which is fine for the CLI-literate among us and not much use for everyone else.

They've finally fixed that! The new app's interface is accessible from the existing system tray menu and lets you chat with any of your installed models. Vision models can accept images through the new interface as well.

Via Hacker News

Tags: ai, generative-ai, local-llms, llms, ollama

[Original link](https://simonwillison.net/2025/Jul/31/ollamas-new-app/#atom-everything)