原文摘要

OpenAI's gold medal performance on the International Math Olympiad

This feels notable to me. OpenAI research scientist Alexander Wei:

I’m excited to share that our latest @OpenAI experimental reasoning LLM has achieved a longstanding grand challenge in AI: gold medal-level performance on the world’s most prestigious math competition—the International Math Olympiad (IMO).

We evaluated our models on the 2025 IMO problems under the same rules as human contestants: two 4.5 hour exam sessions, no tools or internet, reading the official problem statements, and writing natural language proofs. [...]

Besides the result itself, I am excited about our approach: We reach this capability level not via narrow, task-specific methodology, but by breaking new ground in general-purpose reinforcement learning and test-time compute scaling.

In our evaluation, the model solved 5 of the 6 problems on the 2025 IMO. For each problem, three former IMO medalists independently graded the model’s submitted proof, with scores finalized after unanimous consensus. The model earned 35/42 points in total, enough for gold!

HUGE congratulations to the team—Sheryl Hsu, Noam Brown, and the many giants whose shoulders we stood on—for turning this crazy dream into reality! I am lucky I get to spend late nights and early mornings working alongside the very best.

Btw, we are releasing GPT-5 soon, and we’re excited for you to try it. But just to be clear: the IMO gold LLM is an experimental research model. We don’t plan to release anything with this level of math capability for several months.

(Normally I would just link to the tweet, but in this case Alexander built a thread... and Twitter threads no longer work for linking as they're only visible to users with an active Twitter account.)

Here's Wikipedia on the International Mathematical Olympiad:

It is widely regarded as the most prestigious mathematical competition in the world. The first IMO was held in Romania in 1959. It has since been held annually, except in 1980. More than 100 countries participate. Each country sends a team of up to six students, plus one team leader, one deputy leader, and observers.

This year's event is in Sunshine Coast, Australia. Here's the web page for the event, which includes a button you can click to access a PDF of the six questions - maybe they don't link to that document directly to discourage it from being indexed.

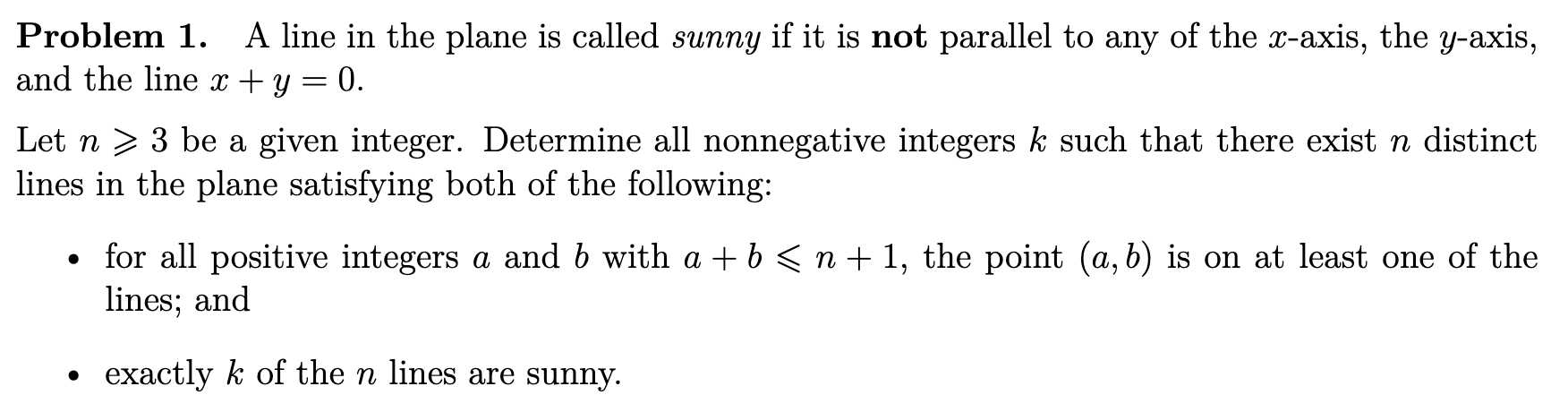

The first of the six questions looks like this:

Alexander shared the proofs produced by the model on GitHub. They're in a slightly strange format - not quite MathML embedded in Markdown - which Alexander excuses since "it is very much an experimental model".

The most notable thing about this is that the unnamed model achieved this score without using any tools. OpenAI's Sebastien Bubeck emphasizes that here:

Just to spell it out as clearly as possible: a next-word prediction machine (because that's really what it is here, no tools no nothing) just produced genuinely creative proofs for hard, novel math problems at a level reached only by an elite handful of pre‑college prodigies.

There's a bunch more useful context in this thread by Noam Brown, including a note that this model wasn't trained specifically for IMO problems:

Typically for these AI results, like in Go/Dota/Poker/Diplomacy, researchers spend years making an AI that masters one narrow domain and does little else. But this isn’t an IMO-specific model. It’s a reasoning LLM that incorporates new experimental general-purpose techniques.

So what’s different? We developed new techniques that make LLMs a lot better at hard-to-verify tasks. IMO problems were the perfect challenge for this: proofs are pages long and take experts hours to grade. Compare that to AIME, where answers are simply an integer from 0 to 999.

Also this model thinks for a long time. o1 thought for seconds. Deep Research for minutes. This one thinks for hours. Importantly, it’s also more efficient with its thinking. And there’s a lot of room to push the test-time compute and efficiency further.

It’s worth reflecting on just how fast AI progress has been, especially in math. In 2024, AI labs were using grade school math (GSM8K) as an eval in their model releases. Since then, we’ve saturated the (high school) MATH benchmark, then AIME, and now are at IMO gold. [...]

When you work at a frontier lab, you usually know where frontier capabilities are months before anyone else. But this result is brand new, using recently developed techniques. It was a surprise even to many researchers at OpenAI. Today, everyone gets to see where the frontier is.

<p>Tags: <a href="https://simonwillison.net/tags/mathematics">mathematics</a>, <a href="https://simonwillison.net/tags/ai">ai</a>, <a href="https://simonwillison.net/tags/openai">openai</a>, <a href="https://simonwillison.net/tags/generative-ai">generative-ai</a>, <a href="https://simonwillison.net/tags/llms">llms</a>, <a href="https://simonwillison.net/tags/llm-reasoning">llm-reasoning</a></p>

进一步信息揣测

- 实验性模型与商用产品的差距:OpenAI明确表示,虽然实验性模型在IMO中表现优异(达到金牌水平),但GPT-5短期内不会发布具备同等数学能力的版本,暗示研究模型与商业化产品之间存在技术或资源限制的鸿沟。

- 非公开训练方法:模型通过“通用强化学习”和“测试时计算扩展”实现突破,而非针对数学竞赛的专项优化,但具体技术细节(如强化学习框架、计算资源规模)未公开,可能涉及核心商业机密。

- 评分流程的隐蔽规则:模型答案由3位IMO奖牌得主独立评分并达成共识,但未披露评分标准细节(如容错率、部分分规则),实际可能存在对AI输出的特殊宽松处理(如自然语言证明的模糊性)。

- 数据获取的灰色操作:2025年IMO题目未直接公开PDF链接,仅通过页面按钮提供,可能是为了规避搜索引擎抓取,而OpenAI却能提前获取题目用于测试,暗示机构与竞赛组织方存在非公开合作。

- 输出格式的局限性:GitHub发布的证明格式“非标准”(混合MathML与Markdown),暴露了模型在数学符号结构化输出上的技术不成熟,实际应用可能需额外后处理。

- 无工具限制的潜在水分:虽然强调模型未使用外部工具,但未说明是否依赖预训练数据中的类似题目或解题模板,可能通过数据泄露(如往届IMO题)间接“记忆”而非纯粹推理。

- 团队协作的隐藏成本:提及“深夜和清晨工作”暗示高强度人工干预(如模型微调、问题筛选),实际成果可能依赖人类专家隐性兜底,非纯AI自主完成。

- 延迟发布的商业策略:明确推迟数学能力上线时间,可能是为避免冲击教育市场(如数学辅导行业)或为付费高阶功能(如数学专业版API)预留窗口。